NCAR’s Yellowstone still running supercomputing jobs and training students

The National Center for Atmospheric Research (NCAR) decommissioned its Yellowstone supercomputer at the end of 2017, according to NCAR’s supercomputing history, but that wasn’t really the end of the story. Researchers still use much of the system’s hardware and publish scientific papers about their work, and students learn about high-performance computing and move on to careers in the field.

The biggest difference is that the supercomputer is now at Stanford University’s High Performance Computing Center (HPCC) instead of the NCAR-Wyoming Supercomputing Center (NWSC). While Yellowstone – yes, the name stuck – is now about a 1,200-mile road trip west of Cheyenne, Wyoming, not much else has changed, according to Steve Jones, Stanford’s HPCC director.

“Yellowstone provides tens of millions of CPU hours each month driving research and supporting our teaching and research mission at the HPC Center,” he said.

Jones and his team were in the market for affordable interconnect switches they could use for a different system when he became aware of Yellowstone’s availability in 2018. “We’re a small, academic, cost-recovery center so we don’t receive direct support from the university to procure equipment,” he said. “I was looking for something that was free to me and still in good condition. As it turns out, there were compute nodes that were of interest, as well. It was a very good platform, a stable platform, and something we could implement.”

The team submitted a successful $5 million proposal to the National Science Foundation (NSF) to acquire three InfiniBand switches and, along with them, 1,080 compute nodes on 15 Yellowstone racks. Jones said the process of transferring ownership of the equipment from the NSF and moving it all to Stanford’s HPCC took about a year, and no small amount of effort by NCAR personnel at the NWSC.

The Yellowstone system’s compute nodes, racks, switches, cables, and even nuts and bolts (such as these shown here) were painstakingly retrieved and trucked to Stanford University for ongoing research and teaching.

Stanford University High Performance Computing Center

“After we got the approval and the actual award letter, everything went really quickly. We took whatever we could fit in a truckload,” he said. In addition to the racks and switches, the “whatever” included some $300,000 worth of fiber cabling and even nuts and bolts that were painstakingly retrieved from Yellowstone’s former home.

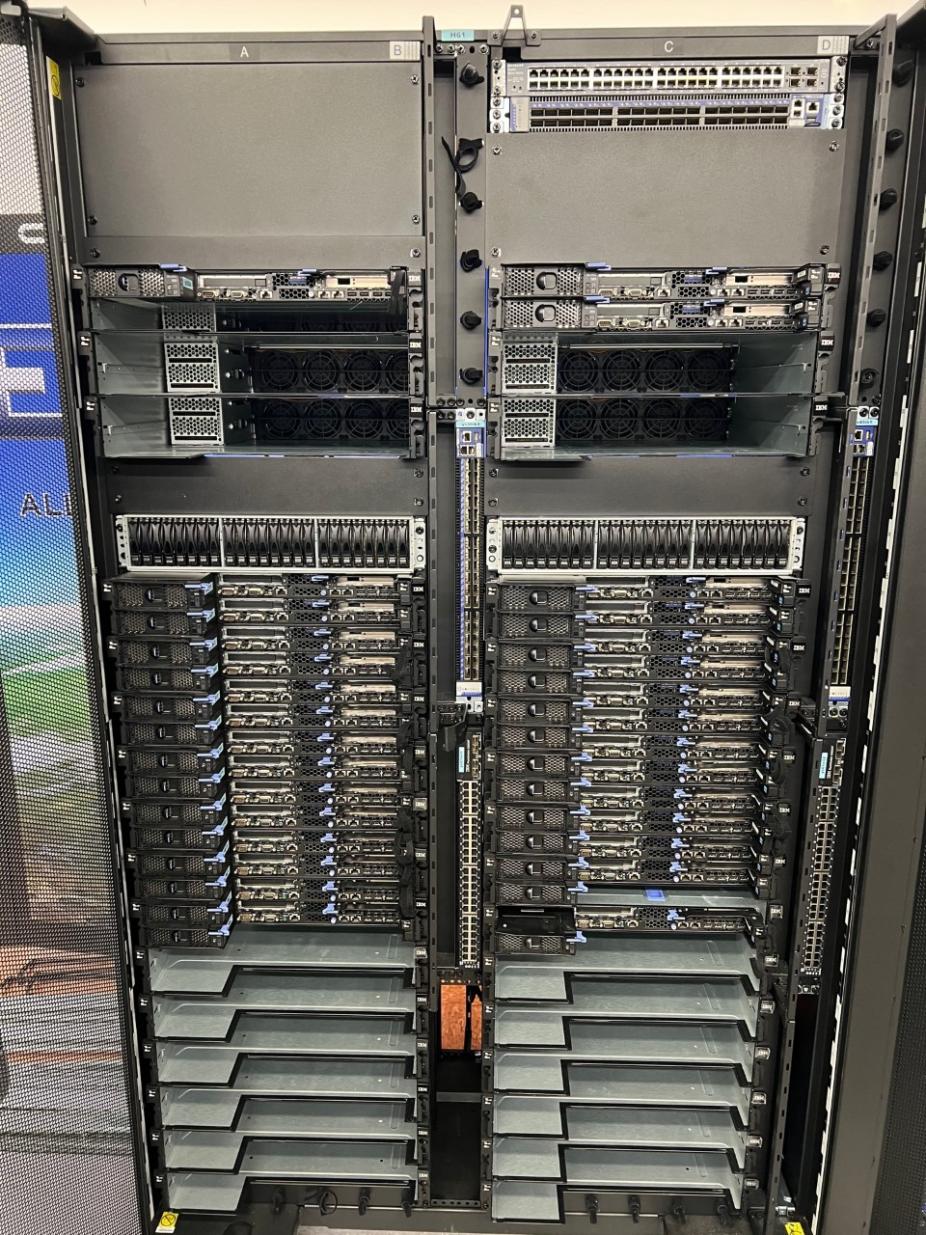

This Yellowstone rack and its compute nodes, along with additional equipment, are used in Stanford’s “ME344: Introduction to High-Performance Computing.” Instructors go so far as to remove IB cards, memory modules, and other components from the compute nodes. In the course, students physically build an HPC cluster from a pile of parts, assembling nodes and cabling everything together in the rack.

Stanford University High Performance Computing Center

Stanford students were involved from the beginning, getting experience in everything from writing proposals to installing equipment when it arrived on campus in 2019. Undergrads and grad students continue to learn from HPCC’s “flagship” Yellowstone system, which features 320 compute nodes. Another 380 nodes are part of other clusters, some nodes stand by as replacements when needed, and 128 additional nodes are used in separate teaching clusters. Even dead nodes are useful, Jones said, as students learn how to put HPC systems together in their classes.

Caetano Melone, a Stanford University undergrad and senior software engineer, described some of the ways the HPCC has used the Yellowstone system to support the research and workforce development mission of the center. For example, it plays an “integral role” as testing infrastructure for an HPC application being developed in the mechanical engineering department. “It provides resources for daily tests verifying code correctness and performance before users deploy their simulations on other clusters.”

He added that Yellowstone helps students learn the inner workings of supercomputing platforms. “Not only do we help administer the cluster and support our users,” he said, “but we also learn skills that we can apply in other contexts, such as internships at government agencies, industry partners, and other employers that benefit from the workforce development happening here.”

Yellowstone will soon be joined by NCAR’s Cheyenne supercomputer, which is slated to be retired at the end of 2023 after seven years of service. Any organization interested in how Cheyenne hardware might be made available for its computing needs is encouraged to reach out to CISL at alloc@ucar.edu.