An interview with NSF NCAR’s Dr. Allison H. Baker, a member of the winning team for the 2024 ACM Gordon Bell Prize for Climate Modeling

by Shira Feldman

Dr. Baker discusses the prize-winning research on exascale climate emulators

Dr. Allison Baker of NSF NCAR's CISL, a member of the team that won the 2024 ACM Gordon Bell Prize for Climate Modeling.

On November 21, 2024, the Association for Computing Machinery (ACM) awarded the 2024 ACM Gordon Bell Prize for Climate Modeling to a 12-member team for their project “Boosting Earth System Model Outputs and Saving PetaBytes in Their Storage Using Exascale Climate Emulators.” The award recognizes innovative parallel computing contributions toward solving the global climate crisis. The ACM made its announcement at the Supercomputing 2024 Conference in Atlanta, GA.

The winning team, led by researchers from King Abdullah University of Science and Technology (KAUST), presented the design and implementation of an exascale climate emulator for addressing the escalating computational and storage requirements of high-resolution Earth System Model simulations.

Dr. Allison H. Baker of the Computational and Information Systems Lab (CISL) at the National Science Foundation (NSF) National Center for Atmospheric Research (NCAR) is a member of the prize-winning team. Here, Dr. Baker talks about the research, its significance, her part in it, and what it means for climate scientists.

What is a climate emulator?

Earth System models, or climate models, are complex mathematical models, and producing high-resolution simulations requires massive computational resources and petabyte-scale storage. The high computational and storage costs mean that often only a few runs are possible, even on a supercomputer. And the situation is only getting worse as the climate community pushes toward ultra-high resolution models.

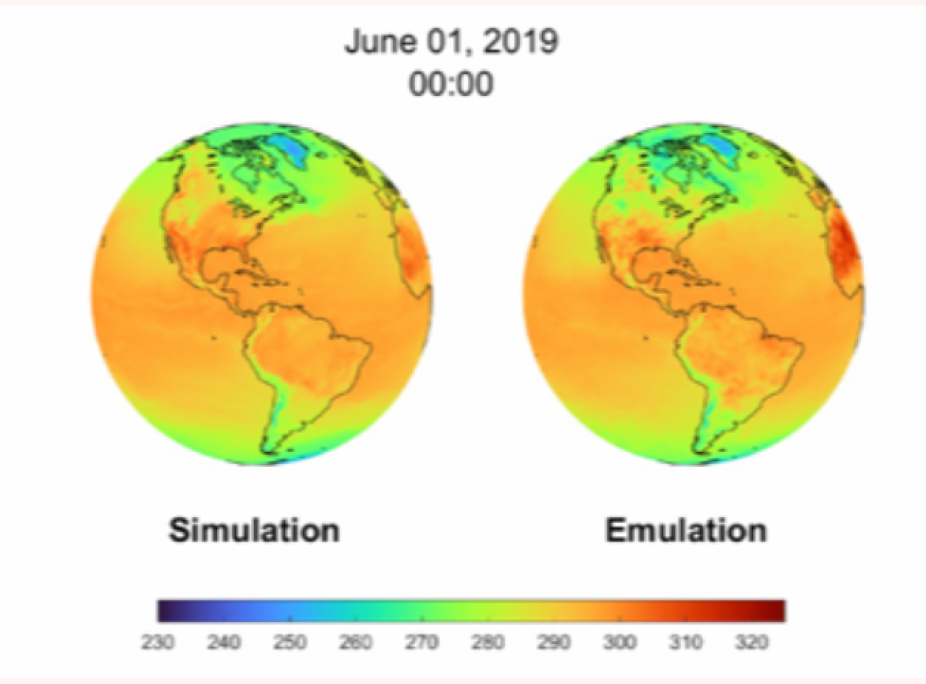

A climate emulator is a statistical model designed to mimic the behavior of a climate model. It is important to note that it is not a replacement for a climate model, but rather a complement and means of enhancing climate model simulations. In fact, a statistical emulator is trained on limited simulations from a climate model, and is then able to generate new data or predict outcomes for new inputs more cheaply than running the full climate model.

As an example, emulators are really useful in studying climate variability. The basic idea is that, in climate and weather forecasting, simulations are not typically just run once but many times because there is uncertainty involved due to the model’s sensitivity to the initial conditions. Of course, the more simulations you run (with slightly different initial conditions), the more confidence you can have in the model predictions because you can quantify the uncertainty. So if you’ve seen weather forecasting on TV, especially with predicting the path of a storm or even likely precipitation percentages, then you may have realized that they have run a model multiple times and calculated statistics on the output so they can better estimate what is likely to happen. You can imagine that especially with a high-resolution climate simulation, a single hundred-year run requires a powerful computer and lots of storage for the model output. So if someone wanted an ensemble of high-res simulations, that's a huge cost that is most likely prohibitive. The idea of these statistical climate emulators is that instead you could just do a couple of the expensive model simulations, and then use them to train your statistical emulator. After that, you could very cheaply and quickly generate many more emulations to help quantify the uncertainty in the prediction.

Simulation versus emulation. Credit: KAUST.

Basically the emulator lets you explore different scenarios without actually running the expensive climate model simulation. It's not a replacement for a climate simulation model by any means, but it's just a way to enhance the capabilities, by being able to get data in a way that's faster and with less storage than actually having to run the model again. Climate emulators are also useful for determining model sensitivity to different physical parameters and model calibration in general.
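To make the "train on a few runs, then generate many" idea concrete, here is a minimal Python sketch. It is an illustration only and not the team's emulator: the "climate model" is a hypothetical stand-in (a linear trend plus AR(1) noise with made-up numbers), and the names `toy_climate_model`, `fit_emulator`, and `emulate` are invented for this example.

```python
import numpy as np

def toy_climate_model(n_time, rng, phi=0.6, sigma=0.5):
    """Hypothetical stand-in for an expensive simulation: trend + AR(1) noise."""
    trend = 14.0 + 0.002 * np.arange(n_time)  # slow warming signal (made-up values)
    noise = np.zeros(n_time)
    noise[0] = rng.normal(0.0, sigma / np.sqrt(1.0 - phi**2))
    for t in range(1, n_time):
        noise[t] = phi * noise[t - 1] + rng.normal(0.0, sigma)
    return trend + noise

def fit_emulator(runs):
    """Fit a mean signal plus AR(1) variability to an (n_runs, n_time) array."""
    n_runs = runs.shape[0]
    mean = runs.mean(axis=0)                   # shared signal across the runs
    resid = runs - mean                        # run-to-run variability
    phi = np.sum(resid[:, 1:] * resid[:, :-1]) / np.sum(resid[:, :-1] ** 2)
    innov = resid[:, 1:] - phi * resid[:, :-1]
    # Subtracting the ensemble mean shrinks the variance by (1 - 1/n_runs); undo that.
    sigma = innov.std() * np.sqrt(n_runs / (n_runs - 1))
    return mean, phi, sigma

def emulate(params, n_members, rng):
    """Cheaply draw new ensemble members from the fitted statistical model."""
    mean, phi, sigma = params
    n_time = mean.size
    noise = rng.normal(0.0, sigma, size=(n_members, n_time))
    resid = np.zeros((n_members, n_time))
    resid[:, 0] = noise[:, 0] / np.sqrt(1.0 - phi**2)  # start in the stationary state
    for t in range(1, n_time):
        resid[:, t] = phi * resid[:, t - 1] + noise[:, t]
    return mean + resid

rng = np.random.default_rng(0)
# Only three "expensive" runs are used for training.
training_runs = np.stack([toy_climate_model(1200, rng) for _ in range(3)])
params = fit_emulator(training_runs)
# Two hundred emulated members cost almost nothing to generate.
ensemble = emulate(params, n_members=200, rng=rng)
print("ensemble shape:", ensemble.shape)
print("mean ensemble spread:", ensemble.std(axis=0).mean())
```

The spread across the emulated members is the kind of uncertainty estimate that would otherwise require many more full model runs.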

What is special about this climate emulator?

The new emulator designed and implemented by our team is really powerful because it can handle spatial and temporal resolutions that are just not possible with previous emulators. Before this work, climate emulators had a spatial resolution lower limit of 100 km. For temporal resolution, monthly and annual frequencies were most common, and daily could only be achieved with strict assumptions.

Our team’s exascale emulator can do a ~3.5 km resolution at hourly frequencies, which is a huge improvement! This is nearly 500 billion data points for a year-long emulation. The Gordon Bell prize is meant to recognize innovative work that really takes advantage of modern supercomputing resources and demonstrates a huge improvement over the current state-of-the-art capabilities. So this emulator does that!
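A back-of-the-envelope check of that figure can be written in a few lines of Python. This sketch assumes a regular latitude-longitude grid with 0.034° spacing (roughly 3.5 km; the exact grid is an assumption, not something stated in the interview), one variable, and one value per grid point per hour. The 0.9° monthly grid used for comparison is likewise an assumed stand-in for a "100 km, monthly" emulator.

```python
HOURS_PER_YEAR = 365 * 24

def grid_points(spacing_deg):
    """Number of points on a regular latitude-longitude grid (assumed layout)."""
    n_lat = round(180 / spacing_deg)
    n_lon = round(360 / spacing_deg)
    return n_lat * n_lon

def points_per_year(spacing_deg, steps_per_year=HOURS_PER_YEAR):
    """Data points for one variable over one year."""
    return grid_points(spacing_deg) * steps_per_year

fine = points_per_year(0.034)                     # assumed ~3.5 km grid, hourly
coarse = points_per_year(0.9, steps_per_year=12)  # assumed ~100 km grid, monthly

print(f"~3.5 km hourly:  {fine:.3e} points per year")
print(f"~100 km monthly: {coarse:.3e} points per year")
print(f"ratio: {fine / coarse:,.0f}x")
print(f"single-precision storage: {fine * 4 / 1e12:.2f} TB per variable per year")
```

Under these assumptions the hourly, ~3.5 km case lands just under 500 billion values per year, consistent with the number quoted above.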

What allows this climate emulator to run at exascale?

There were a number of important technical innovations in this work that all came together to enable exascale performance, the first being the design of the statistical emulator itself. Our team’s emulator method uses spherical harmonic transforms (SHT), which allows modeling to be done in a simpler domain than the original spatial-temporal climate grid. This choice really reduces the required computational and storage costs, which allows for finer resolutions than previously possible. These transforms also make it easier to do multiple resolutions with the same emulator.
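A small Python sketch can show why working in a spectral domain is simpler. It is deliberately reduced and is not the team's implementation: it handles only a field that varies with latitude, in which case the spherical harmonic expansion reduces to a Legendre series, and the temperature profile is made up. The function names `forward_transform` and `inverse_transform` are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre

def forward_transform(values, x, w, max_degree):
    """Project a latitude-only field onto Legendre polynomials P_0..P_max_degree."""
    coeffs = np.empty(max_degree + 1)
    for degree in range(max_degree + 1):
        basis = legendre.legval(x, [0.0] * degree + [1.0])  # P_degree at the nodes
        coeffs[degree] = 0.5 * (2 * degree + 1) * np.sum(w * values * basis)
    return coeffs

def inverse_transform(coeffs, x):
    """Rebuild the field on the grid from its spectral coefficients."""
    return legendre.legval(x, coeffs)

# Gauss-Legendre nodes x = sin(latitude) and quadrature weights w.
x, w = legendre.leggauss(64)
# Made-up zonal temperature profile in kelvin: warm equator, cold poles.
temperature = 288.0 - 30.0 * x**2

coeffs = forward_transform(temperature, x, w, max_degree=8)
rebuilt = inverse_transform(coeffs, x)

print("coefficients:", np.round(coeffs, 6))
print("max reconstruction error:", np.max(np.abs(rebuilt - temperature)))
```

Here 64 grid values are captured by a handful of coefficients, most of them zero. A statistical model fitted to the coefficients has far fewer numbers to handle than one fitted to the grid, and truncating the series at a different degree gives a different resolution from the same machinery.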

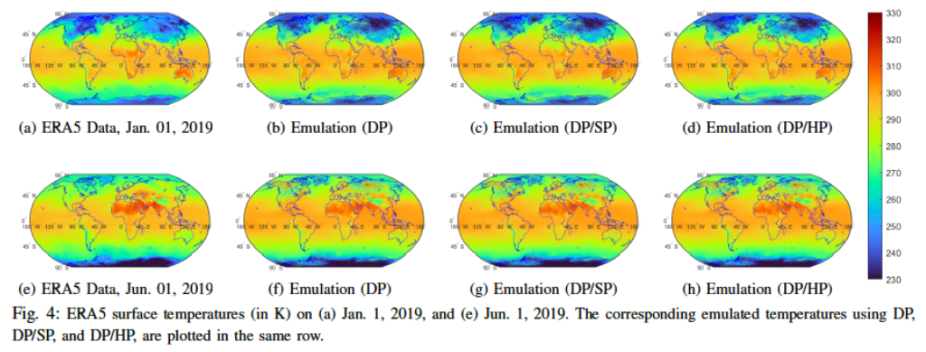

A number of algorithmic decisions were also important in achieving high performance. One example is the use of mixed-precision arithmetic. Most numerical climate models are computed entirely in 64-bit double-precision. Our team’s emulator is able to use lower precision (like single and even half) for some parts of the computations, which is a big performance advantage on GPU hardware. Using a runtime system is also critical for efficiently executing on such large parallel systems. The PaRSEC system can dynamically schedule tasks based on available computational resources and also manage all of the data dependencies.
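The trade-off behind mixed precision can be seen in a short NumPy sketch. This is one simple flavor of the idea (store a matrix in lower precision, accumulate the product in double precision) and is an assumption for illustration, not the scheme used in the prize-winning code. The covariance-like matrix and the helper names are hypothetical.

```python
import numpy as np

def covariance_like_matrix(n, length_scale=10.0):
    """Hypothetical test matrix: correlation decays with distance between indices."""
    idx = np.arange(n)
    return np.exp(-np.abs(idx[:, None] - idx[None, :]) / length_scale)

def matvec_with_storage(A, x, storage_dtype):
    """Store A in storage_dtype, then multiply in float64. Returns (result, bytes)."""
    A_stored = A.astype(storage_dtype)        # rounding happens here
    y = A_stored.astype(np.float64) @ x       # accumulate in double precision
    return y, A_stored.nbytes

rng = np.random.default_rng(0)
A = covariance_like_matrix(200)
x = rng.standard_normal(200)
reference = A @ x                             # full double-precision result

for dtype in (np.float64, np.float32, np.float16):
    y, nbytes = matvec_with_storage(A, x, dtype)
    rel_err = np.linalg.norm(y - reference) / np.linalg.norm(reference)
    print(f"{np.dtype(dtype).name:8s} {nbytes:7d} bytes  relative error {rel_err:.1e}")
```

Half precision needs a quarter of the memory of double precision at the price of a larger, but often tolerable, error. The speed advantage mentioned above comes from GPU hardware with dedicated low-precision units, which this CPU-based sketch does not demonstrate.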

Finally, I would say that in general a whole lot of work went into optimizing the code to run efficiently on supercomputers with different architectures and types of GPUs.
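The dependency-aware task scheduling described above for the runtime system can be illustrated with a toy scheduler built from the Python standard library. This is not PaRSEC and does not use its API; `run_task_graph` and the task names are hypothetical, and a thread pool stands in for the nodes and GPUs of a real machine.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter

def run_task_graph(tasks, deps, max_workers=4):
    """Run each task as soon as all of its inputs are available.

    tasks: name -> callable taking the results of its dependencies, in order
    deps:  name -> list of task names it depends on
    """
    sorter = TopologicalSorter({name: deps.get(name, []) for name in tasks})
    sorter.prepare()                       # raises CycleError on circular dependencies
    results, finish_order, running = {}, [], {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        while sorter.is_active():
            # Launch every task whose dependencies have all completed.
            for name in sorter.get_ready():
                inputs = [results[d] for d in deps.get(name, [])]
                running[pool.submit(tasks[name], *inputs)] = name
            # Wait for at least one task, then release whatever it unblocks.
            finished, _ = wait(list(running), return_when=FIRST_COMPLETED)
            for future in finished:
                name = running.pop(future)
                results[name] = future.result()
                finish_order.append(name)
                sorter.done(name)
    return results, finish_order

tasks = {
    "load_north": lambda: [1.0, 2.0],
    "load_south": lambda: [3.0, 4.0],
    "transform_north": lambda tile: [2 * v for v in tile],
    "transform_south": lambda tile: [2 * v for v in tile],
    "combine": lambda north, south: sum(north) + sum(south),
}
deps = {
    "transform_north": ["load_north"],
    "transform_south": ["load_south"],
    "combine": ["transform_north", "transform_south"],
}

results, finish_order = run_task_graph(tasks, deps)
print("combined value:", results["combine"])
print("completion order:", finish_order)
```

The two "load" and two "transform" tasks are free to run concurrently, while "combine" starts only once both of its inputs exist. A production runtime makes the same kind of decision across thousands of nodes and also moves the data between them.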

How did you get involved with this project?

Figure 4 from the journal article, comparing two days of ERA5 surface temperature data with the corresponding emulated data using different mixed-precision options. Credit: KAUST.

Part of the reason I got involved in the first place is my interest and expertise in lossy data compression for climate simulation data. I collaborated with several members of this team a few years ago on using a model-based stochastic approach for saving storage in climate ensembles. And while emulators are distinct from data compressors, they share the same goal of trying to reduce the massive storage burden from climate models, just from an alternative approach. And that led to getting involved with the current work. I was able to provide feedback on the climate science aspects and impact of the work, and help the team understand the significance in terms of computational and storage comparisons with climate simulations.

What are the scientific benefits or real-world applications of this research?

The scientific benefit is that you can get more information for your analysis using fewer computational resources. You don't need the storage for all the simulation output, and the emulator is fast, so it doesn’t need as many compute cycles as running a model simulation. And this more cost-effective approach will allow more climate scientists to access and work with ultra-high resolution data, which will of course help to better our understanding and prediction of the effects of our changing climate.

Is it better for the environment?

Yeah, it is definitely better for the environment. Because ironically, running a really big climate simulation takes a lot of energy and compute power. Reducing run time and getting the best performance that you can out of a machine are both really important for a more sustainable approach.

What are the next steps?

I think we will see more scientists using emulators to complement the information that they have from the more expensive climate model simulations. Hopefully, we will start seeing more emulators made available as part of publicly available data collections.

Congratulations to Dr. Baker and the entire winning team for the well-deserved recognition of their work! For more information on the research that went into this award-winning project, see this article from the Oak Ridge National Laboratory.